When one only wants to generate a color matrix, the patch count is not particularly relevant: the ColorChecker’s 24 patches are enough. But if one wants to do LUT profiles, more patches tend to be better.

Third, there’s the production technique. Some charts, like CMP’s Digital Target, are produced on high-end inkjets, which implies the target is very vulnerable to moisture, making it more fragile than other targets.

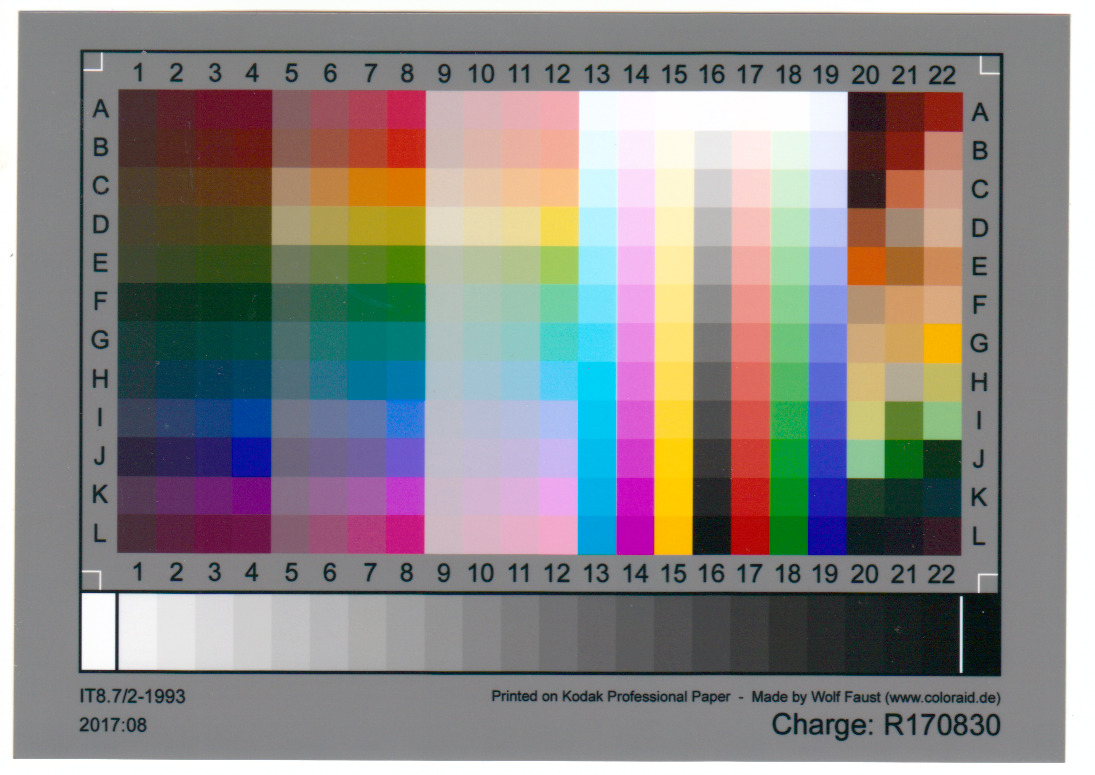


Most compact cameras can’t output RAW, which effectively means they cannot be profiled (yes, some could, using the CHDK firmware hack). Probably half of all digital bridge cameras can output RAW and thus can be profiled as well. Pretty much all digital SLR cameras are covered as well…

What color target do I need?

There are currently lots of color targets available on the market, produced using different techniques and sold at very different prices. Here are some of the things you need to keep in mind…

First, we can classify all targets into two groups, matte and semigloss… In theory semigloss targets can cover a larger gamut (range of colors) than matte targets, but most semigloss targets tend to glare, which is a big inconvenience when shooting the target. Most IT8 targets are semigloss; CMP’s Digital Target, for example, is matte… I personally prefer matte targets for their convenience, since they are easily shot using a decent strobe, which is often hard to do with a semigloss target. Semigloss targets are often best shot outdoors on a sunny, cloudless day, at an angle to prevent glare.

Second, there’s the patch count. Gretag’s Classic ColorChecker only has 24 patches, while most IT8 targets have up to 288 patches, and CMP’s Digital Target even has 570 patches.

Without going into all the nitty gritty details, there are basically two kinds of profiles. The first are XYZ matrices (these are often called color matrices), which are typically combined with a gamma curve; the second are LUT profiles. What’s a LUT? It’s a lookup table… The big difference is that with an XYZ matrix all color transformations are calculated on the fly, while LUTs are precalculated, so transforming color via a LUT is simply looking up an input color and its matching output color. The nice thing about LUTs is that they can deal with slight (nonlinear) deviations and can even be tweaked for creative purposes. So when generating a LUT profile, the profile is likely to pick up some of the peculiarities of your particular camera. XYZ matrices don’t have that problem, since they are defined by only 3×3 coordinates in XYZ colorspace and thus are quite generic by their very nature. This is also the reason why we are sticking to color matrices instead of supplying more detailed LUT profiles (besides disk space usage).

What digital cameras can be color profiled

All cameras that can output a digital RAW format supported by RawSpeed can be properly profiled.
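To make the matrix versus LUT distinction a bit more tangible, here is a minimal Python sketch. It is only an illustration, not how Darktable or any profiling tool actually does it. The matrix used is the standard linear sRGB to XYZ (D65) matrix as a stand-in, since a real camera matrix is different for every camera model, and the names `apply_matrix`, `build_lut` and `apply_lut` are made up for this example. The LUT here is simply filled from the matrix and looked up by nearest node, whereas real LUT profiles are built from target measurements and interpolate between nodes.

```python
# Stand-in matrix: linear sRGB -> XYZ (D65). A real camera matrix differs per model.
MATRIX = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]


def apply_matrix(rgb, m=MATRIX):
    """Matrix profile: compute the output color on the fly (3x3 times RGB)."""
    return tuple(sum(m[r][c] * rgb[c] for c in range(3)) for r in range(3))


def build_lut(transform, steps=5):
    """LUT profile: precalculate the output for a steps^3 grid of input colors."""
    lut = {}
    for i in range(steps):
        for j in range(steps):
            for k in range(steps):
                node = (i / (steps - 1), j / (steps - 1), k / (steps - 1))
                lut[(i, j, k)] = transform(node)
    return lut


def apply_lut(rgb, lut, steps=5):
    """Look up the nearest precalculated node (no interpolation in this sketch)."""
    key = tuple(min(steps - 1, max(0, round(v * (steps - 1)))) for v in rgb)
    return lut[key]


white = (1.0, 1.0, 1.0)
lut = build_lut(apply_matrix)

xyz_from_matrix = apply_matrix(white)  # calculated on the fly
xyz_from_lut = apply_lut(white, lut)   # looked up from the precalculated table

print(xyz_from_matrix)
print(xyz_from_lut)
```

Note that the matrix is just nine numbers, while even this coarse LUT already holds 125 entries, each of which could be individually tweaked. That is where both the flexibility and the camera-specific quirks of LUT profiles come from.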


The only explanation I can think of is that the red rendition has been a deliberate compromise on Adobe’s part to safely render skin tones, at the cost of red rendition elsewhere. So ideally we want an alternate, enhanced color matrix that will render red as it’s supposed to, possibly at the cost of some skin tone rendition (rendition of skin tones is often a matter of taste, so we can fix that using the color zones “natural skin tones” preset).

Historically there have been some problems with the Adobe color matrices, the biggest of these being the rendition of red colors. To this day I still have no clue why this really is a problem.


In the past I wrote about camera profiling for UFRaw. Since then I’ve pretty much switched to Darktable, and I’ve learned a few things. So here we go again, with a vengeance…

Why? The pretty images our cameras output aren’t literally what the camera sensors see; there is a lot of proprietary postprocessing involved. Now when working in the RAW format we ditch all that postprocessing in favor of RAW sensor data, which is good if we want maximum control and flexibility. The problem is that camera vendors don’t publish their proprietary postprocessing methods, which leaves us with a question: how do we postprocess then? In the past some good work on this topic has been done by Adobe, who published their DNG specification including color matrices. Color matrices are specifications on how camera native color is transformed into something that an end user might like, and ideally will be correct when viewed on a calibrated display.
